
<div align="center">

<img src="https://nixery.dev/nixery-logo.png">

</div>

-----------------

[](https://buildkite.com/tvl/depot)

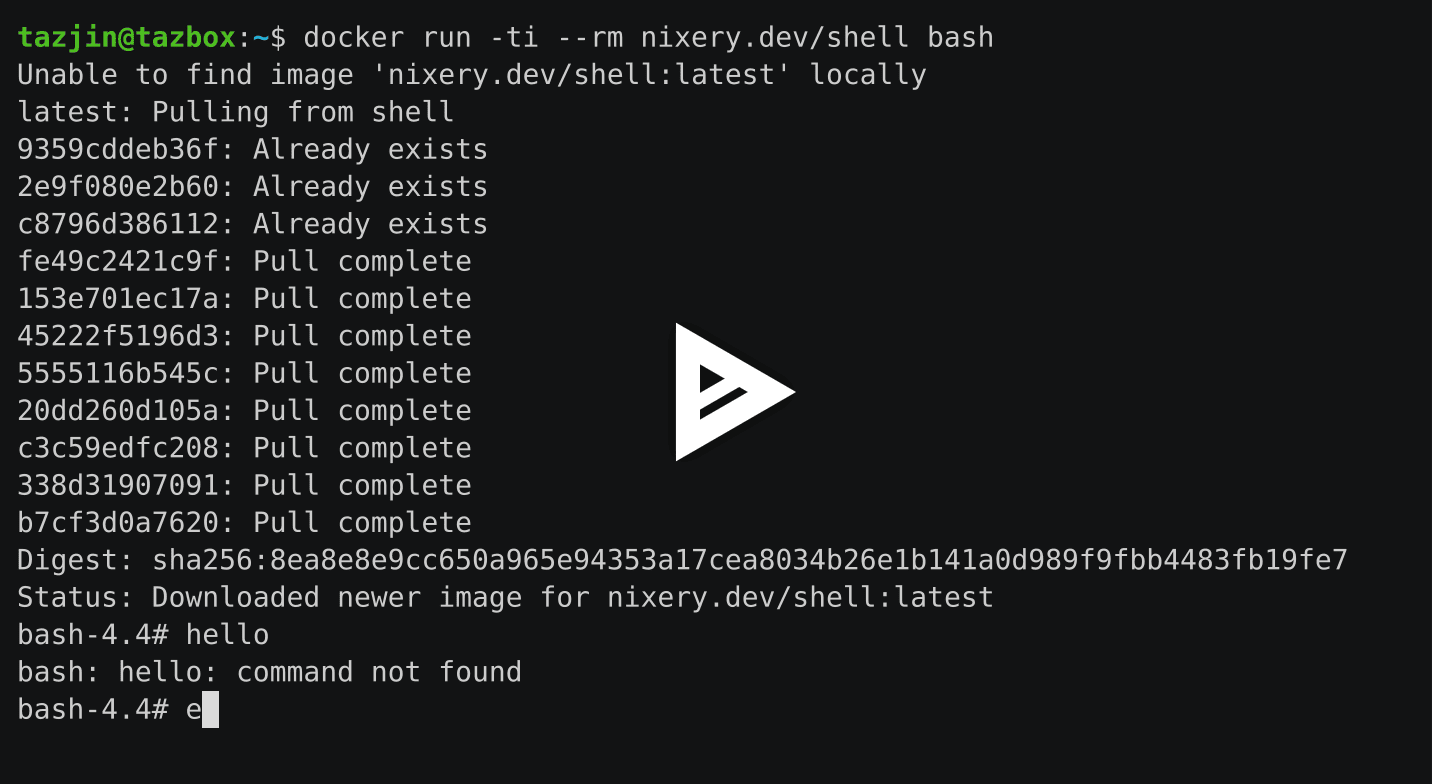

**Nixery** is a Docker-compatible container registry that is capable of
transparently building and serving container images using [Nix][].

Images are built on-demand based on the *image name*. Every package that the
user intends to include in the image is specified as a path component of the
image name.

The path components refer to top-level keys in `nixpkgs` and are used to build a
container image using a [layering strategy][] that optimises for caching popular
and/or large dependencies.
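The naming scheme described above can be sketched in a few lines of Python. This is only an illustration of how a client might assemble an image name from a list of packages; the helper `image_reference` is hypothetical and not part of Nixery, and the packages `git` and `htop` are merely example `nixpkgs` keys.

```python
from __future__ import annotations


def image_reference(registry: str, packages: list[str], tag: str | None = None) -> str:
    """Join package names into an image reference, one path component each.

    Hypothetical helper for illustration; Nixery itself only sees the
    resulting image name.
    """
    if not packages:
        raise ValueError("at least one package is required")
    for name in packages:
        # A package name must fit into a single path component.
        if not name or "/" in name or ":" in name:
            raise ValueError(f"invalid package name: {name!r}")
    reference = registry + "/" + "/".join(packages)
    return f"{reference}:{tag}" if tag else reference


if __name__ == "__main__":
    print(image_reference("nixery.dev", ["shell", "git", "htop"]))
```

The resulting string can be passed to `docker pull` or any other client that speaks the registry protocol.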

A public instance as well as additional documentation is available at
[nixery.dev][public].

You can watch the NixCon 2019 [talk about
Nixery](https://www.youtube.com/watch?v=pOI9H4oeXqA) for more information about
the project and its use-cases.

The canonical location of the Nixery source code is
[`//tools/nixery`][depot-link] in the [TVL](https://tvl.fyi)
monorepository. If cloning the entire repository is not desirable, the
Nixery subtree can be cloned like this:

    git clone https://code.tvl.fyi/depot.git:/tools/nixery.git

The subtree is infrequently mirrored to `tazjin/nixery` on GitHub.

## Demo

This [recording](https://asciinema.org/a/262583?autoplay=1) shows an example in
which an image containing an interactive shell and GNU `hello` is downloaded.

To try it yourself, head to [nixery.dev][public]!

The special meta-package `shell` provides an image base with many core
components (such as `bash` and `coreutils`) that users commonly expect in
interactive images.

## Feature overview

* Serve container images on-demand using image names as content specifications

  Specify package names as path components and Nixery will create images, using
  the most efficient caching strategy it can to share data between different
  images.

* Use private package sets from various sources

  In addition to building images from the publicly available Nix/NixOS channels,
  a private Nixery instance can be configured to serve images built from a
  package set hosted in a custom git repository or filesystem path.

  When using this feature with custom git repositories, Nixery will forward the
  specified image tags as git references.

  For example, if a company used a custom repository overlaying their packages
  on the Nix package set, images could be built from a git tag `release-v2`:

  `docker pull nixery.thecompany.website/custom-service:release-v2`

* Efficient serving of image layers from Google Cloud Storage

  After building an image, Nixery stores all of its layers in a GCS bucket and
  forwards requests to retrieve layers to the bucket. This enables efficient
  serving of layers, as well as sharing of image layers between redundant
  instances.
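To make the relationship between image names, packages and tags concrete, the following Python sketch splits an image name into its package list and its tag. It is an assumption-laden illustration rather than Nixery's actual parsing code: the function `split_image_name` is hypothetical, and the fallback to `latest` simply mirrors what Docker clients request when no tag is given.

```python
from __future__ import annotations


def split_image_name(name: str) -> tuple[list[str], str]:
    """Split 'pkg-a/pkg-b:tag' into (['pkg-a', 'pkg-b'], 'tag').

    Hypothetical helper: with a custom git repository, the returned tag
    is the value that would be forwarded as a git reference.
    """
    path, _, tag = name.partition(":")
    # Every non-empty path component names one package.
    packages = [part for part in path.split("/") if part]
    return packages, tag or "latest"


if __name__ == "__main__":
    packages, git_ref = split_image_name("custom-service:release-v2")
    print(packages, git_ref)
```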

## Configuration

Nixery supports the following configuration options, provided via environment
variables:

* `PORT`: HTTP port on which Nixery should listen
* `NIXERY_CHANNEL`: The name of a Nix/NixOS channel to use for building
* `NIXERY_PKGS_REPO`: URL of a git repository containing a package set (uses
  locally configured SSH/git credentials)
* `NIXERY_PKGS_PATH`: A local filesystem path containing a Nix package set to
  use for building
* `NIXERY_STORAGE_BACKEND`: The type of backend storage to use. Currently
  supported values are `gcs` (Google Cloud Storage) and `filesystem`.

  Each of these requires additional backend configuration; see the
  [storage section](#storage) for details.
* `NIX_TIMEOUT`: Number of seconds that any Nix builder is allowed to run
  (defaults to 60)
* `NIX_POPULARITY_URL`: URL to a file containing popularity data for
  the package set (see `popcount/`)

If the `GOOGLE_APPLICATION_CREDENTIALS` environment variable is set to a service
account key, Nixery will also use this key to create [signed URLs][] for layers
in the storage bucket. This makes it possible to serve layers from a bucket
without having to make them publicly available.

In case the `GOOGLE_APPLICATION_CREDENTIALS` environment variable is not set, a
redirect to storage.googleapis.com is issued, which means the underlying bucket
objects need to be publicly accessible.
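The decision described above can be summarised in a short Python sketch. It is not Nixery's implementation: the function names are hypothetical, the object name used in the example is made up, and the actual signing step (which needs the service account key) is deliberately left out. The URL shape `https://storage.googleapis.com/<bucket>/<object>` is the standard path-style address for publicly readable GCS objects.

```python
from __future__ import annotations

from typing import Mapping


def layer_access_mode(env: Mapping[str, str]) -> str:
    """Return how layers would be handed to clients for the given environment."""
    if env.get("GOOGLE_APPLICATION_CREDENTIALS"):
        # A service account key is available, so URLs can be signed and
        # the bucket may stay private.
        return "signed-url"
    # Without a key, clients are redirected to the public object URL.
    return "public-redirect"


def public_layer_url(bucket: str, object_name: str) -> str:
    """Build the unsigned redirect target for a publicly readable object."""
    return f"https://storage.googleapis.com/{bucket}/{object_name}"


if __name__ == "__main__":
    env = {"GCS_BUCKET": "example-bucket"}  # no credentials configured
    if layer_access_mode(env) == "public-redirect":
        # "layers/abc123" is a made-up object name for illustration.
        print(public_layer_url(env["GCS_BUCKET"], "layers/abc123"))
```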

### Storage

Nixery supports multiple different storage backends in which its build cache and
image layers are kept, and from which they are served.

Currently the available storage backends are Google Cloud Storage and the local
file system.

In the GCS case, images are served by redirecting clients to the storage bucket.
Layers stored on the filesystem are served straight from the local disk.

These extra configuration variables must be set to configure storage backends:

* `GCS_BUCKET`: Name of the Google Cloud Storage bucket to use (**required** for
  `gcs`)
* `GOOGLE_APPLICATION_CREDENTIALS`: Path to a GCP service account JSON key
  (**optional** for `gcs`)
* `STORAGE_PATH`: Path to a folder in which to store and from which to serve
  data (**required** for `filesystem`)
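The required and optional variables listed above can be expressed as a small validation routine. The following Python sketch is only an illustration of those rules under the assumption that an unknown or missing backend should be rejected; `StorageConfig` and `storage_config_from_env` are hypothetical names, and Nixery's own configuration handling may differ.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Mapping


@dataclass(frozen=True)
class StorageConfig:
    backend: str
    gcs_bucket: str | None = None
    credentials_path: str | None = None
    storage_path: str | None = None


def storage_config_from_env(env: Mapping[str, str]) -> StorageConfig:
    """Validate the storage-related environment variables."""
    backend = env.get("NIXERY_STORAGE_BACKEND", "")
    if backend == "gcs":
        bucket = env.get("GCS_BUCKET")
        if not bucket:
            raise ValueError("GCS_BUCKET is required for the gcs backend")
        # The service account key is optional for gcs.
        return StorageConfig(
            backend,
            gcs_bucket=bucket,
            credentials_path=env.get("GOOGLE_APPLICATION_CREDENTIALS"),
        )
    if backend == "filesystem":
        path = env.get("STORAGE_PATH")
        if not path:
            raise ValueError("STORAGE_PATH is required for the filesystem backend")
        return StorageConfig(backend, storage_path=path)
    raise ValueError(f"unsupported storage backend: {backend!r}")


if __name__ == "__main__":
    import os

    try:
        print(storage_config_from_env(os.environ))
    except ValueError as err:
        print(f"invalid configuration: {err}")
```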

### Background

The project started out inspired by the [buildLayeredImage][] blog post with the
intention of becoming a Kubernetes controller that can serve declarative image
specifications defined in CRDs as container images. The design for this was
outlined in [a public gist][gist].

## Roadmap

### Kubernetes integration

It should be trivial to deploy Nixery inside of a Kubernetes cluster with
correct caching behaviour, addressing and so on.

See [issue #4](https://github.com/tazjin/nixery/issues/4).

### Nix-native builder

The image building and layering functionality of Nixery will be extracted into a
separate Nix function, which will make it possible to build images directly in
Nix builds.

[Nix]: https://nixos.org/

[layering strategy]: https://tazj.in/blog/nixery-layers

[gist]: https://gist.github.com/tazjin/08f3d37073b3590aacac424303e6f745

[buildLayeredImage]: https://grahamc.com/blog/nix-and-layered-docker-images

[public]: https://nixery.dev

[depot-link]: https://cs.tvl.fyi/depot/-/tree/tools/nixery

[gcs]: https://cloud.google.com/storage/
